Retro Review: nVidia Geforce 256 DDR Part 3

3rd March 2025

In Part 2 of this retro review, we ran a bunch of 3D performance benchmarks on the Creative 3D Blaster Annihilator Pro. In Part 3, we'll swap out the hamstrung Pentium II-233 for something more period-correct: a Pentium III-800 "Coppermine", which was cutting-edge in November 1999.

Don't worry, some actual game videos are coming, but before we get there I want to see how the card performs under stress, and how its performance on a low-to-mid range PC compares with a higher-end one from late 1999.

We're still using the ABit AB-BH6 motherboard (AGP 2x) with 128 MB of PC100 SDRAM, so let's see how much the CPU was holding back this excellent card.

More Performance Testing!

Well, another little issue: this motherboard (Rev 1.02) is too old to recognise my PIII-800, even with the latest BIOS version "SS". I tried about 25 different settings in its CPU Soft Menu II BIOS tool before realising that Intel locked the multiplier on the Pentium III range, so the front-side bus (FSB) is the only thing you can adjust. The best I could get was 724 MHz with the 124 MHz (1/3) option. I set the AGP Aperture Size in the BIOS to 64 MB, half the available system memory. The AGPCLK/CPUCLK ratio, which sets the AGP bus speed relative to the FSB speed, was set to 2/3, so it's overclocking the AGP bus to just over 82 MHz compared to the norm of 66 MHz. The only other setting I changed was the CPU core voltage, raising it from the default 1.6V to 1.65V, as the Coppermine needs between 1.65 and 1.75V.
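The AGP figure is simple arithmetic: the AGP bus clock is the FSB multiplied by the AGPCLK/CPUCLK ratio. The short Python sketch below works it through. The helper name is my own, and the 100 MHz row is only a reference point, assuming the 2/3 ratio is intended for a stock 100 MHz FSB (which gives the usual 66 MHz AGP clock).

```python
def agp_clock_mhz(fsb_mhz: float, ratio_num: int = 2, ratio_den: int = 3) -> float:
    """Hypothetical helper: AGP bus clock = FSB clock x (AGPCLK/CPUCLK ratio)."""
    return fsb_mhz * ratio_num / ratio_den


# Assumed reference point: the 2/3 ratio on a stock 100 MHz FSB.
STOCK_FSB_MHZ = 100
stock_agp = agp_clock_mhz(STOCK_FSB_MHZ)

# Compare the stock FSB with the 124 MHz FSB used in these tests.
for fsb in (STOCK_FSB_MHZ, 124):
    agp = agp_clock_mhz(fsb)
    over_pct = (agp / stock_agp - 1) * 100
    print(f"FSB {fsb} MHz -> AGP {agp:.1f} MHz ({over_pct:+.0f}% over stock)")
```

Because the ratio is fixed, the AGP bus is overclocked by exactly the same proportion as the FSB, roughly 24% here.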

So here's how the GeForce 256 DDR ran on the Pentium III with the above settings via AGP 2x:

| Test | 3DMark '99 | 3DMark 2000 |
|---|---|---|
| 800 x 600 x 16-bit, 16-bit Z-buffer, triple frame buffer | 5907 3DMarks, 9616 CPU Marks, 54-64 fps | 5477 3DMarks, 376 CPU Marks, 35-121 fps |
| 800 x 600 x 32-bit, 16-bit Z-buffer, triple frame buffer | 5277 3DMarks, 9560 CPU Marks, 51-55 fps | 4853 3DMarks, - CPU Marks, 34-99 fps |
| 1024 x 768 x 16-bit, 16-bit Z-buffer, triple frame buffer | 5263 3DMarks, 9654 CPU Marks, 49-57 fps | 4561 3DMarks, 373 CPU Marks, 33-99 fps |
| 1024 x 768 x 32-bit, 16-bit Z-buffer, triple frame buffer | 4284 3DMarks, 9535 CPU Marks, 41-44 fps | 3573 3DMarks, 373 CPU Marks, 33-99 fps |
| 1280 x 1024 x 16-bit, 16-bit Z-buffer, triple frame buffer | 4227 3DMarks, 9599 CPU Marks, 41-44 fps | 3548 3DMarks, - CPU Marks, 32-72 fps |
| 1280 x 1024 x 32-bit, 16-bit Z-buffer, triple frame buffer | 3045 3DMarks, 9671 CPU Marks, 27-34 fps | 2267 3DMarks, - CPU Marks, 15-42 fps |

As expected, the overall scores drop significantly as resolution and colour depth increase, showing that at these settings the card struggles to keep up and the CPU is not the bottleneck. As with the earlier tests, the Creative card didn't skip a beat: very smooth, with high quality output and decent frame rates.

SDR vs DDR Comparison

As mentioned in Part 1, I've also acquired an SDR-based Geforce 256 card, the Asus AGP-V6600 Deluxe. Back in 1999/early 2000, this was the most premium Geforce 256 you could buy - it was the only one to use SGRAM instead of SDRAM and also the only one to include onboard hardware monitoring via its Winbond W8371D chip. Because this is the Deluxe version, it also came with S-Video In/Out and Composite In ports as well as VR glasses support. It retailed for $50 more than other Geforce 256 SDR cards.

Here's how the Asus compared to the DDR Geforce 256, with the Pentium III Coppermine running at 744 MHz. That doesn't quite match the CPU speed in the tests above, so I've made adjustments to approximate the percentage performance difference. The percentage in each cell is the Asus card's result relative to the Creative card:

| Test | 3DMark '99 | 3DMark 2000 |
|---|---|---|
| 800 x 600 x 16-bit, 16-bit Z-buffer, triple frame buffer | 5654 3DMarks, 11537 CPU Marks, 51-63 fps, 79% | 5396 3DMarks, 353 CPU Marks, 37-121 fps, 98% |
| 800 x 600 x 32-bit, 16-bit Z-buffer, triple frame buffer | 4754 3DMarks, 11551 CPU Marks, 39-44 fps, 72% | 4404 3DMarks, - CPU Marks, 34-99 fps, 90% |
| 1024 x 768 x 16-bit, 16-bit Z-buffer, triple frame buffer | 4930 3DMarks, 11560 CPU Marks, 47-52 fps, 78% | 4547 3DMarks, 384 CPU Marks, 34-93 fps, 99% |
| 1024 x 768 x 32-bit, 16-bit Z-buffer, triple frame buffer | 3818 3DMarks, 11498 CPU Marks, 37-40 fps, 71% | 3129 3DMarks, - CPU Marks, 23-60 fps, 88% |
| 1280 x 1024 x 16-bit, 16-bit Z-buffer, triple frame buffer | 3980 3DMarks, 11438 CPU Marks, 39-40 fps, 83% | 3174 3DMarks, 254 CPU Marks, 20-64 fps, 89% |
| 1280 x 1024 x 32-bit, 16-bit Z-buffer, triple frame buffer | 2746 3DMarks, 11499 CPU Marks, 25-31 fps, 74% | 1871 3DMarks, - CPU Marks, 13-35 fps, 83% |

Interestingly, the 3DMark 2000 CPU Mark came in lower on the Asus tests than on the Creative tests above, despite the PIII running at a slightly higher clock. Overall, though, the card with single data rate SGRAM scored on average about 24% lower than the DDR SDRAM card in the 3DMark '99 benchmarks and about 9% lower in the 3DMark 2000 benchmarks. All the above tests were run at stock core and memory clock speeds.
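Those averages can be checked directly against the percentages in the table above. This Python sketch simply takes the mean of each benchmark's six relative-performance figures (copied from the table in row order) and reports how far below 100% it sits; the names are illustrative, not from any tool.

```python
from statistics import mean

# Asus SDR result as a percentage of the Creative DDR result, copied from the
# table above in row order: 800x600x16, 800x600x32, 1024x768x16,
# 1024x768x32, 1280x1024x16, 1280x1024x32.
RELATIVE_PCT = {
    "3DMark '99": [79, 72, 78, 71, 83, 74],
    "3DMark 2000": [98, 90, 99, 88, 89, 83],
}


def average_shortfall(percentages: list[int]) -> float:
    """How far below 100% the SDR card sits, averaged over all test settings."""
    return 100 - mean(percentages)


for bench, pcts in RELATIVE_PCT.items():
    print(f"{bench}: about {average_shortfall(pcts):.0f}% slower on average")
```

The means come out at roughly 76.2% and 91.2%, which round to the 24% and 9% shortfalls quoted above.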

The Asus drivers come with a tweak utility that lets you easily ramp up the core from 120 MHz to 142 MHz and the memory from 166 MHz to 195 MHz (the 5ns SGRAM chips are rated for up to 200 MHz). I had to download Tweak v2.15, as the original Tweak v2.08 on my Asus driver CD failed to locate my display driver and would not run.
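To put those clock settings in perspective, here's a quick Python sketch of the headroom involved. It assumes the 5ns figure is the SGRAM's cycle time, so the rated clock is simply 1 / 5ns = 200 MHz; the helper names are my own.

```python
def rated_clock_mhz(cycle_time_ns: float) -> float:
    """Clock ceiling implied by a memory cycle-time rating: f = 1 / t."""
    return 1000.0 / cycle_time_ns  # 1000 / ns gives MHz


def pct_increase(stock_mhz: float, tuned_mhz: float) -> float:
    """Percentage increase from the stock clock to the tweaked clock."""
    return (tuned_mhz / stock_mhz - 1) * 100


core_gain = pct_increase(120, 142)  # core: 120 -> 142 MHz
mem_gain = pct_increase(166, 195)   # memory: 166 -> 195 MHz
mem_ceiling = rated_clock_mhz(5)    # assumed 5ns cycle-time rating

print(f"Core overclock:   {core_gain:.1f}%")
print(f"Memory overclock: {mem_gain:.1f}%")
print(f"Memory rating:    {mem_ceiling:.0f} MHz ({mem_ceiling - 195:.0f} MHz above the 195 MHz maximum)")
```

Both the core and memory end up roughly 18% above stock, with the memory still a little under its rated clock.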

After gradually increasing both core and memory, the card demonstrated that it was very stable at the maximum overclock settings of 142 MHz core and 195 MHz memory. The GPU core temperature rose from 63°C with stock settings to 69°C at maximum overclock. At these overclocked settings it produced these 3DMark scores:

| Test | 3DMark '99 | 3DMark 2000 |
|---|---|---|
| 800 x 600 x 16-bit, 16-bit Z-buffer, triple frame buffer | 5536 3DMarks, 11409 CPU Marks, 52-60 fps | 5070 3DMarks, 182 CPU Marks, 33-124 fps |
| 800 x 600 x 32-bit, 16-bit Z-buffer, triple frame buffer | 5086 3DMarks, 11468 CPU Marks, 48-54 fps | 4517 3DMarks, - CPU Marks, 23-98 fps |
| 1024 x 768 x 16-bit, 16-bit Z-buffer, triple frame buffer | 5223 3DMarks, 11438 CPU Marks, 49-56 fps | 5003 3DMarks, 360 CPU Marks, 36-104 fps |
| 1024 x 768 x 32-bit, 16-bit Z-buffer, triple frame buffer | 4259 3DMarks, 11496 CPU Marks, 42-43 fps | 3850 3DMarks, - CPU Marks, 36-76 fps |
| 1280 x 1024 x 16-bit, 16-bit Z-buffer, triple frame buffer | 4388 3DMarks, 11513 CPU Marks, 43-45 fps | 3544 3DMarks, 167 CPU Marks, 23-71 fps |
| 1280 x 1024 x 32-bit, 16-bit Z-buffer, triple frame buffer | 3214 3DMarks, 11430 CPU Marks, 30-35 fps | 2342 3DMarks, - CPU Marks, 17-44 fps |
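A quick way to see what the overclock actually bought is to compare each 3DMark '99 result with the stock Asus result for the same setting. This Python sketch assumes both Asus runs used the same 744 MHz CPU setting; the scores are copied from the stock and overclocked tables, and the names are illustrative.

```python
# 3DMark '99 scores for the Asus card, in table row order.
SETTINGS = ["800x600x16", "800x600x32", "1024x768x16",
            "1024x768x32", "1280x1024x16", "1280x1024x32"]
STOCK = [5654, 4754, 4930, 3818, 3980, 2746]        # 120 MHz core / 166 MHz memory
OVERCLOCKED = [5536, 5086, 5223, 4259, 4388, 3214]  # 142 MHz core / 195 MHz memory


def score_change(stock: int, tuned: int) -> float:
    """Percentage change in 3DMarks from the stock run to the overclocked run."""
    return (tuned / stock - 1) * 100


for name, before, after in zip(SETTINGS, STOCK, OVERCLOCKED):
    print(f"{name:>13}: {score_change(before, after):+.1f}%")
```

Run as-is, it shows a slight drop at 800 x 600 in 16-bit, but gains that grow with resolution and colour depth, up to about 17% at 1280 x 1024 in 32-bit. That fits with the card, rather than the CPU, being the bottleneck at the higher settings.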

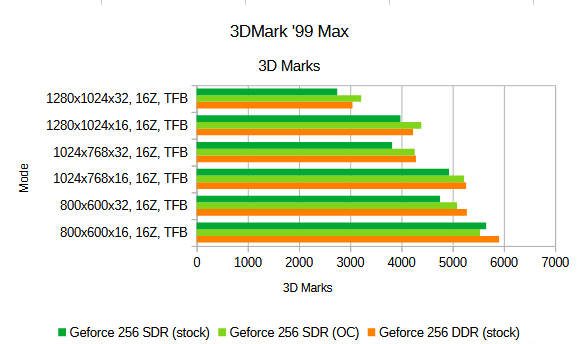

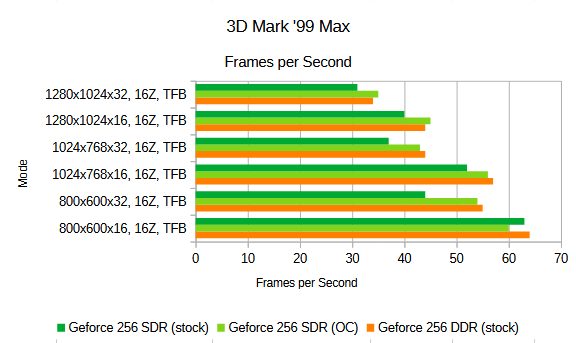

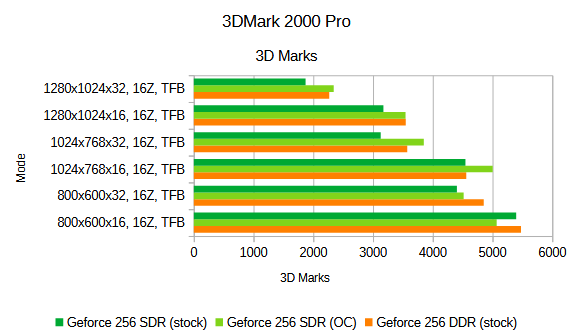

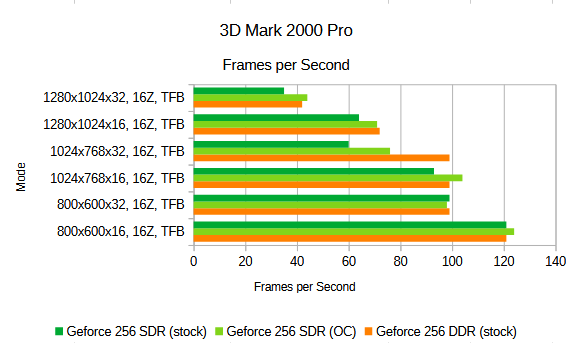

Here are all the results for comparison:

Let the Games Begin!

To truly see what the Geforce 256 DDR is capable of, I want to run a series of games released around that time. By 2003, a lot of games required DirectX 8.1 as a minimum, but some still worked with DirectX 7, the version this card was designed for. Numerous games also supported OpenGL. I've read online that some of the titles in my list run on this card, but I'm not so sure.

The test rig looks like this: Windows 98 SE (retail version), 128 MB PC100 SDRAM (a single module), a Turtle Beach Santa Cruz PCI sound card, an 80 GB Samsung hard disk, and an NEC DVD-RW optical drive. For drivers, I used the Creative Annihilator Pro Win98 driver version 4.12.01.0353 along with Microsoft DirectX 7. Other software: the Windows 98 OHCI USB driver, to get USB mass storage working for easier file copying, and FRAPS v1.9d for measuring frame rates.

For capture and recording, I used the Gefen converter/scaler and OBS 29.1.3. Looking back on these recordings, it's clear my OBS settings are incorrect - if you know how to capture video at different resolutions in OBS without scaling, compression or loss of quality and are willing to share, let me know!

DirectX 7 was used throughout the first round of testing. For any games that failed to work, I then updated DirectX to 8 and then 9.0b. My reasoning is that if a game simply checks the DirectX version at start-up, it will fall at the first hurdle, even if it doesn't use any later DirectX features and the graphics card is otherwise supported. It's worth noting that OpenGL is only a graphics library, whereas DirectX also includes DirectInput, DirectSound, etc., so a game may still need a certain DirectX version even if it uses OpenGL for graphics. V-Sync is disabled by default in the Creative control panel, so I kept it off for testing.
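To illustrate that reasoning, here's a hypothetical Python sketch of the kind of start-up check a game's installer or launcher might perform. It isn't taken from any real game; it only shows how a plain version comparison rejects DirectX 7 even when the graphics card itself would cope with the game.

```python
import re


def parse_dx_version(text: str) -> tuple[int, int, str]:
    """Turn strings like '7.0', '8.0a' or '9.0b' into comparable tuples."""
    match = re.fullmatch(r"(\d+)\.(\d+)([a-z]?)", text.strip())
    if match is None:
        raise ValueError(f"unrecognised DirectX version: {text!r}")
    major, minor, letter = match.groups()
    return int(major), int(minor), letter


def passes_version_gate(installed: str, required: str) -> bool:
    """Hypothetical launcher check: compares version numbers only,
    not which features the game or the graphics card actually use."""
    return parse_dx_version(installed) >= parse_dx_version(required)


for installed in ["7.0", "8.0a", "9.0b"]:
    ok = passes_version_gate(installed, "8.0a")
    print(f"DirectX {installed} vs required 8.0a: {'runs' if ok else 'refuses to start'}")
```

Tuples compare element by element, so a missing letter ('') sorts before 'a', and 8.0 correctly counts as older than 8.0a.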

Here's a list of some games from 1999 to 2004 - fast or complex 3D games are what this card was designed for. This review won't go through them all, but we'll see how we go.


Unreal Tournament (1999)

Unreal Tournament, or UT as it's commonly abbreviated, is a fun multiplayer first-person shooter. When it launched in 1999, we used to play multiplayer deathmatch in the office over our lunch break - the real challenge was knowing when to get back to work! The game has modest minimum requirements: a Pentium 200, 32 MB of RAM, 515 MB of free disk space, DirectX 7 or OpenGL, 8 MB of video RAM, and a 4x CD-ROM.

Unreal Tournament intro

I ran the original game version 400 with DirectX 7. The first CD contains the main game, and the second CD contains S3TC (S3 Texture Compression) textures - naturally I didn't install these as they're only for compatible S3 graphics cards like the Savage3D. There are official patches that bring the game up to version 436, and there's also a Game of the Year (GOTY) Edition which contains the first three Bonus Packs from Epic Megagames - basically new levels and players. I didn't install any of these.

I played in 'Direct 3D Support' mode (the alternative is software rendering) with World Texture Detail set to high, Skin Detail set to high, and both 'Show Decals' and 'Use Dynamic Lighting' enabled. True to its low system requirements, the game will run happily at resolutions ranging from 320 x 200 in 16-bit colour up to 1600 x 1200 in 32-bit colour. The game also supports hardware 3D audio (A3D or EAX), but I had this disabled for my tests.

A quick blast on the Oblivion level at various resolutions and colour depths:

| Resolution | 16-bit | 32-bit |
|---|---|---|
| 640 x 480 | 54-76 fps | 42-76 fps |
| 800 x 600 | 52-76 fps | 34-54 fps |
| 1024 x 768 | 41-74 fps | 23-49 fps |
| 1280 x 1024 | 29-64 fps | 20-47 fps |

UT ran incredibly well, even at 1280 x 1024 with all the other graphics options set to maximum. Probably the best compromise of image quality vs performance on this PIII (724 MHz effective clock speed) was at 1024 x 768 in 16-bit.

Image Quality: 5/5, Performance: 5/5, Fun Factor: 4/5

Max Payne (2001)

Arriving in July 2001, Max Payne introduced us to 'bullet time'. A decent third-person shooter with a great film noir-esque storyline, the original met with critical acclaim and was followed by two sequels. Max Payne had these minimum system requirements: a 450 MHz Intel or AMD CPU (700 MHz recommended), 96 MB RAM, DirectX 8.0a, and a 16 MB graphics card.

The game version here is v1.05. The SETUP program required that I install DirectX 8.0a at this point, and the full install used 826 MB of hard disk space. After this, it ran without any issues.

Max Payne intro

Medal of Honor: Allied Assault (2002)

MOHAA was the first Medal of Honor title on the PC, following the series' debut on the PlayStation, and hit the stores in January 2002. The game's minimum system requirements are a Pentium III / Athlon 500 MHz CPU (Pentium III-700 recommended), 128 MB of RAM, 1.23 GB of free hard disk space, a 16 MB OpenGL-capable graphics card, DirectX 8.0a or OpenGL, and an 8x CD-ROM.

My copy of the game was the original v1.0, but I applied the last official patch, v1.11, which is automatically included if you install either of the two expansion packs: Spearhead or Breakthrough. MOHAA runs on the Quake 3 engine, which requires an OpenGL-compatible graphics card, so the DirectX 8 requirement just isn't true. The game ran just fine on the first try with DirectX 7 installed.

Medal of Honor: Allied Assault intro

Allied Assault can run at resolutions from 512 x 384 up to 1600 x 1200, each with a choice of 16-bit or 32-bit colour depth. Further graphics options include texture detail (low/medium/high), texture colour depth (16- or 32-bit), and texture filter (bilinear or trilinear). A few extra touches include 'Wall Decals' and 'Weather Effects', as well as texture compression, which lets more texture detail fit in video memory. The game's graphics settings also have an Advanced page for further tweaking, including terrain detail, shadows, effects, dynamic lighting, volumetric smoke, etc. By default, the game starts you off at 640 x 480 x 32-bit with low texture detail, 16-bit texture colour depth and bilinear filtering. Wall decals are off but weather effects are on, and textures aren't compressed.

After you've completed the Basic Training exercise, the first mission will appear on the map: Arzew. The videos below are from this first mission, called 'Lighting the Torch':

| Resolution | 16-bit | 32-bit |
|---|---|---|
| 640 x 480 | 41-64 fps | 30-48 fps |
| 800 x 600 | 27-46 fps | 34-54 fps |
| 1024 x 768 | 24-40 fps | - |

MOHAA ran well, but beyond 1024 x 768 the frame rate dropped noticeably, and that was with low detail and few graphics options enabled. MOHAA is definitely showing its age, being very much an on-the-rails shooter.

Image Quality: 3/5, Performance: 4/5, Fun Factor: 3/5

Star Wars: X-Wing Alliance (1999)

Arriving in March 1999, X-Wing Alliance was the follow-up to TIE Fighter and X-Wing vs TIE Fighter, and would be the last in the series from Larry Holland. The game has these minimum system requirements: Pentium 200, 32 MB of RAM, 275 MB of free hard disk space, DirectX 6, a 4x CD-ROM, and a 2 MB video card.

I installed v1.2G and then the patches that bring the game up to v2.02. This latest update added the Film Room for mission playback, support for Aureal 3D, improved DirectSound 3D support, and updates to some missions.

Star Wars: X-Wing Alliance intro

XWA can run at resolutions from 640 x 480 up to 1600 x 1200. Instead of letting you choose the colour depth, it lets you set texture resolution and explosion resolution to low, medium or high, as well as toggle diffuse lighting and other effects. With hardware acceleration, you can also set hardware MIP mapping to off, normal or high, use palettised textures, and enable or disable bilinear filtering.

The game ran very well with the Geforce 256 card. At 800 x 600 with high texture resolution, high explosion resolution, and high hardware MIP mapping, the game was running at 20 to 30 fps.

| Resolution | Frame rate |
|---|---|
| 800 x 600 | 20-30 fps |
| 1024 x 768 | 19-27 fps |

Click for Part 4 of this review.
